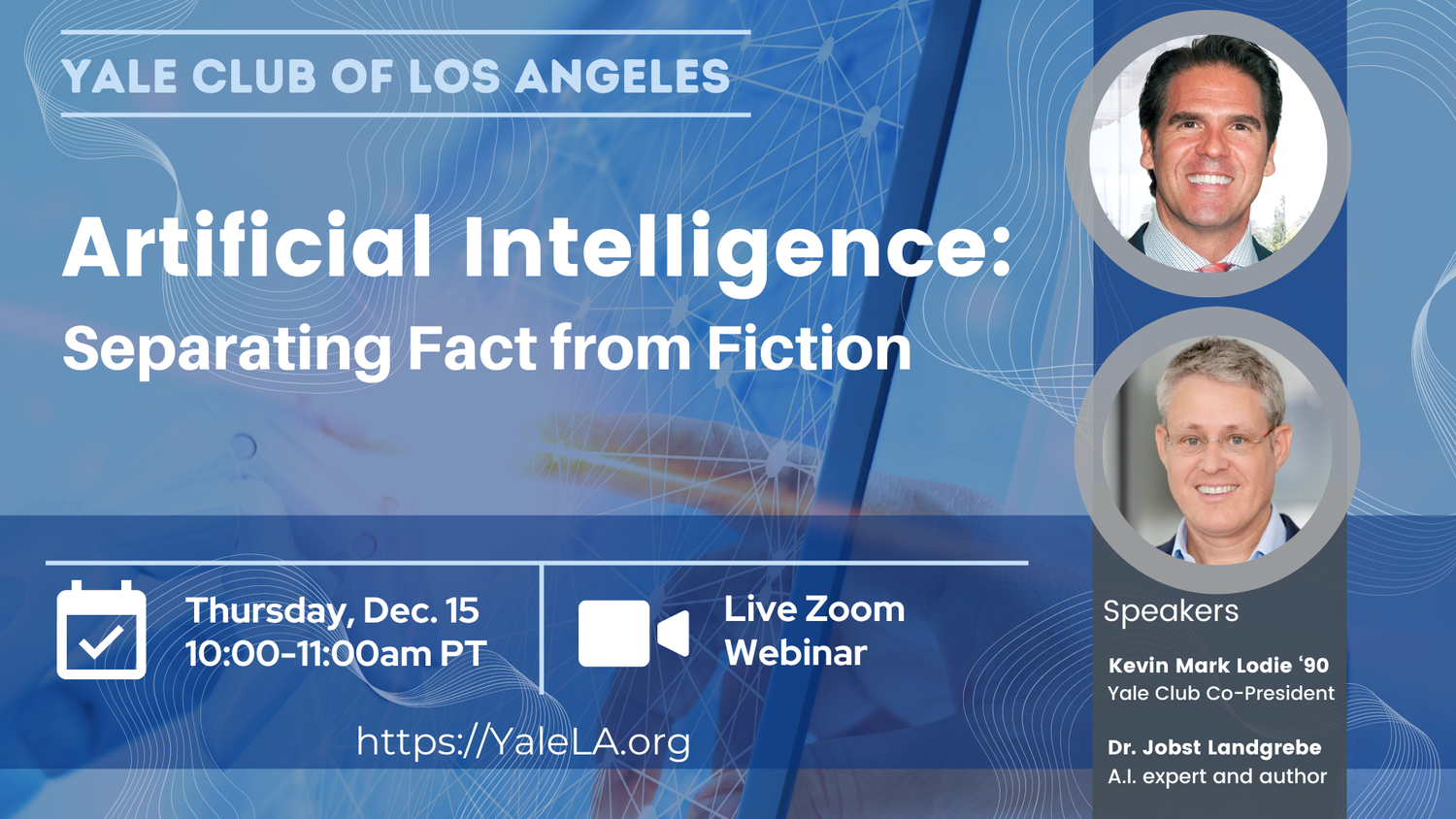

Artificial Intelligence: Separating Fact From Fiction

Talk held at the Yale Club of Los Angeles on December 15th, 2022

Notes from the Q&A session

Q. Do you think there is an upper limit to the IQ that an AI could score?

A. I’m certain it will never achieve a very high IQ, because it lacks the property you need to obtain a high IQ, which is real intelligence. If you test it properly, the way testing is done seriously in the military for officer positions, for example, or in the CIA, it will never achieve a good score. You would need to adapt the test batteries, but if you subject it to the full testing procedure, I predict it will never become very good.

Q. Following on your comments earlier on the limitations of end-to-end approaches: personally, I’ve been more of an advocate of hybrid approaches. You might call them a combination of neural networks with either rules-based logic or some kind of model-based approach such as Kalman filtering, which, as you pointed out, NASA and others used early on for launches. The number one reason is that my work is mostly on natural systems, which tend to be rather complex: they combine stochastic variability with other sources of variability over time or distance that you cannot simply capture, because you can never sample enough in any reasonable amount of time to capture all that variability. The number two reason is that in many processes and sectors (health is one you pointed out, but also industrial settings), an understanding of causation, or at least some explanation of it, is as important as correlation. Even if I had all the data in the world and something seemed to correlate with something else in a first-, second-, or third-order sense, that would still not be good enough, because I could not explain why a decision was taken. You have to have some kind of audit trail of how you got to that point and how to back off from it. That’s basically what I wanted to share.

A. That’s absolutely right. The deterministic part of the hybrid model, the compositional part that you mentioned, is often based on causal knowledge: differential equations, partial differential equations, rules, or first-order logic. They all reflect our causal knowledge of the world, and if you combine them with the stochastic part, you can build very powerful systems, like the self-defense systems now installed on all battleships. They have exactly this property.
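The hybrid idea discussed here, a deterministic causal model combined with a stochastic model of uncertainty, can be illustrated with a one-dimensional Kalman filter. The sketch below is a minimal illustration under stated assumptions, not the design of any system mentioned in the talk: a known constant velocity serves as the deterministic motion model, while Gaussian process noise (variance `q`) and measurement noise (variance `r`) form the stochastic part. The function names `kalman_1d`, `simulate`, and `mse` and all parameter values are hypothetical.

```python
import random


def kalman_1d(measurements, velocity, dt, q, r, x0=0.0, p0=1.0):
    """Filter noisy position readings of an object moving at a known velocity.

    velocity * dt is the deterministic (causal) part of the model;
    q (process-noise variance) and r (measurement-noise variance) are the
    stochastic part. Returns one position estimate per measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: apply the deterministic motion model; uncertainty grows by q.
        x_pred = x + velocity * dt
        p_pred = p + q
        # Update: blend prediction and measurement, weighted by their uncertainties.
        k = p_pred / (p_pred + r)  # Kalman gain, between 0 and 1
        x = x_pred + k * (z - x_pred)
        p = (1.0 - k) * p_pred
        estimates.append(x)
    return estimates


def simulate(steps=200, velocity=1.0, dt=0.1, q=0.01, r=4.0, seed=0):
    """Hypothetical ground truth and noisy measurements for the demo."""
    rng = random.Random(seed)
    truth, measurements = [], []
    x = 0.0
    for _ in range(steps):
        x += velocity * dt + rng.gauss(0.0, q ** 0.5)
        truth.append(x)
        measurements.append(x + rng.gauss(0.0, r ** 0.5))
    return truth, measurements


def mse(values, truth):
    """Mean squared error between two equally long sequences."""
    return sum((v - t) ** 2 for v, t in zip(values, truth)) / len(truth)


if __name__ == "__main__":
    truth, zs = simulate()
    est = kalman_1d(zs, velocity=1.0, dt=0.1, q=0.01, r=4.0)
    print("raw measurement MSE:", round(mse(zs, truth), 3))
    print("filtered MSE:       ", round(mse(est, truth), 3))
```

The filtered error should come out well below the raw measurement error, because the causal model carries information that any single noisy measurement lacks.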

Q. [Regarding commerce applications of AI] Our customers tend to think about using AI and machine learning for next best action in the sales organization or for better personalization of marketing content. I often find myself advising them to make very small, no-regret moves: steps that aren’t too big. Sometimes there’s internal politics, and sometimes baby steps are needed. In the world of marketers and sales organizations, where would you invest in a pragmatic application? Is it the data? Is it the technology? Or is it perhaps a move from business rule sets to AI-driven rule sets? Any pragmatic thoughts for those who just want to dip their toe in the water with some no-regret moves?

A. Three fields that work really well are ad placement, next best action, and advice or recommender systems. Another trend that I think is very important for online sales is AI-based price prediction. The key is to find the right data, to use it, and to use classical statistical learning as well. Google and Amazon can use neural networks for some of their products, but they also use plain classical regression and classification models. You don’t need an additional neural network expert. You should start with a good old statistician, who will do variable selection and tell you which distributions you can work with. 95%, or even 99%, of the customers we see don’t have enough data to [use neural networks], so they should just use classical statistical learning, and they can get very far with it. So that’s my advice.
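To illustrate the classical statistical route recommended here, the following sketch fits an ordinary-least-squares regression of price on a single predictor in plain Python. It is a minimal example under stated assumptions: the data (product age in months against sale price) and the names `fit_ols` and `r_squared` are hypothetical, and a real price model would first go through the variable selection and distributional checks a statistician would do.

```python
def fit_ols(xs, ys):
    """Return (intercept, slope) minimizing the sum of squared residuals."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("predictor has no variance")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(xs, ys, intercept, slope):
    """Share of the variance in ys explained by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Hypothetical data: product age in months vs. observed sale price.
ages = [1, 2, 3, 4, 5, 6]
prices = [98.0, 95.5, 93.0, 91.0, 88.5, 86.0]

if __name__ == "__main__":
    intercept, slope = fit_ols(ages, prices)
    print(f"price = {intercept:.2f} + ({slope:.3f}) * age")
    print(f"R^2 = {r_squared(ages, prices, intercept, slope):.4f}")
    print(f"predicted price at 7 months: {intercept + slope * 7:.2f}")
```

A model this simple is transparent: each coefficient can be read and questioned directly, which matters when there is too little data to justify anything more complex.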

Q. [Regarding Generative AI]: I’m a photographer, and I’ve been using Midjourney and Stable Diffusion to explore how generative AI can evolve and change photography. Why [do you say generative AI] is hype? [Also, can you comment on] the training data that’s used?

A. OK, so generative AI works by setting up two neural networks that work against each other. One generates image variants, and the other, an adversarial neural network, decides which of the generated images can be used and filters out the rest. It can generate really amazing effects, but I think it can’t be creative. It’s very hard to be creative as a photographer, because so much good stuff has already been tried out, and we now have 100 years or more of photographic history. From the perspective of the theory of art, it’s getting harder and harder to be an original photographer. Being a good photographer is hard enough, but doing something original, something new, is very hard. Because these networks are trained on existing photos, they basically try to imitate what has already been achieved. The results can still be very impressive, but the networks can’t “create”, because human creativity is the ability to find patterns that no one has seen before and show them to others. For example, Goya made some paintings around 1800 that were impressionistic, but nobody wanted to see them. Goya had already invented impressionism, but it was so early that nobody was interested; that came only 100 years later. So to be creative you need to see something new, but [with generative AI] the output needs to fit [a pre-existing pattern]. These machines create impressive results, but you won’t find real creativity in them.

Q. I work on the creative side of broadcast and digital production for large brands, through advertising agencies. In the music industry, there’s a lot of experimentation with AI because it’s cool, interesting, and creative. Have you seen any particularly interesting or unusual uses of AI in advertising or in creative industries like music?

A. There was a cool use case published in Ad Age years ago. They took pop music since the mid-50s and put it into a phase space, the kind of mathematical coordinate system I explained at the beginning, and then checked for innovation. Using pattern recognition, they found that the last innovation in music was early-80s hip hop, and that everything that has happened in pop since then just recombines, as linear combinations, elements that were invented before. I had always thought [pop music] wasn’t evolving anymore, and then an algorithm showed mathematically that this is true. I found this fascinating, and that’s why I like pattern recognition so much, as I mentioned earlier in the case of the battlefield data. You can find things that confirm your hypothesis or that show you a new way of looking at things, so this is a great example of how humans and machines can work together. But one should not expect it to create something new. It can certainly find interesting patterns, and it can of course be used for evaluation: for example, predicting whether certain effects used in movies will be acclaimed by the masses. So there are many use cases that can work, but you have to design them very carefully, because fashions change and perceptions change.
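The idea of testing whether new music is “just a linear combination” of earlier elements can be sketched as a projection problem. This is an assumption about how such a test could work, not the method of the study mentioned: each piece is represented by a hypothetical feature vector (a point in the phase space), and its novelty is taken to be the length of the component that cannot be written as a linear combination of earlier vectors, computed with Gram-Schmidt orthogonalization. The function name `novelty_scores` and the tolerance `tol` are hypothetical.

```python
import math


def novelty_scores(vectors, tol=1e-9):
    """For each vector, in chronological order, return the norm of its
    component orthogonal to the span of all earlier vectors.

    A score of 0.0 means the vector is a linear combination of what came
    before; a positive score measures how far it leaves that span.
    """
    basis = []   # orthonormal basis of the span seen so far
    scores = []
    for v in vectors:
        residual = [float(c) for c in v]
        # Subtract the projection onto each basis vector (modified Gram-Schmidt).
        for b in basis:
            coeff = sum(r * bi for r, bi in zip(residual, b))
            residual = [r - coeff * bi for r, bi in zip(residual, b)]
        norm = math.sqrt(sum(r * r for r in residual))
        scores.append(norm)
        # Only genuinely new directions extend the basis.
        if norm > tol:
            basis.append([r / norm for r in residual])
    return scores


if __name__ == "__main__":
    # Hypothetical 3-dimensional feature vectors, in chronological order.
    pieces = [[1, 0, 0], [0, 1, 0], [2, 3, 0], [0.5, 0.5, 1]]
    for i, score in enumerate(novelty_scores(pieces)):
        print(f"piece {i}: novelty {score:.3f}")
```

Real feature data is noisy, so no piece would score exactly zero; in practice one would compare scores against a noise threshold rather than test for an exact linear dependence.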

Q. In discussions of the “Great Filter,” intelligent AI often comes up as the end of humankind: it will become self-aware and replicate and improve itself to the point where it may decide that humans are a waste of energy. I’d like your comments on that.

A. (Barry Smith) [On drivenness.] In our book, under the heading of the Machine Will… Machines cannot want anything. The only kind of wanting they can have is the kind you can simulate, for instance by making a machine play a game over and over again billions of times until it learns how to win; but it never learns to want to win. It just learns how to win algorithmically. There is no case where a machine wants. [So, if a machine is] going to take over the galaxy, it is going to have to want tremendously powerful things. But this is impossible, and humans can always pull the plug on a machine. A machine can merely compute what it is told to compute. This is also connected with creativity. … Take the case of Bartók: he showed how you can write really original, creative music not by inventing something new but by combining European classical music with gypsy music. But he had the will to do this in a creative way, and that’s why we consider Bartók a great composer. That’s the missing ingredient, which the AI world will never ever be able to replicate.

(Jobst) In addition to what Barry said, the core reason why machines will not become truly intelligent is that, to achieve this, we would have to create a mathematical model of intelligence. But we can only observe intelligence externally.

Intelligent behavior is the ability to find solutions to unseen problems without being trained. [We can find solutions] spontaneously. This is what we observe in animals and in humans. It also comprises the ability to verbalize this, to create language patterns about it, which we call “objectifying intelligence”. This we can observe, but we don’t know how it’s achieved, and I think we will never know. If we can’t [understand how it is achieved], we can’t model it mathematically, and then we can’t build it, because trial and error alone won’t get us there. There is trial and error in engineering, but there always needs to be a core mathematical model. Take electromagnetic induction, which was discovered by Faraday and then used to build the electric motor and the generator (which are basically the foundation of the industrial revolution). Both of these were refined by trial and error, but only once the principle was understood mathematically. To get a generator, you need to understand the EMF principle mathematically first, and this is always the case with what we engineer. We need to understand the basic mathematical principle to be able to engineer; this is true for nuclear fission, nuclear fusion, or the quantum computer. We always need a very good mathematical model first, and then we can build the thing; if we don’t have one, trial and error won’t succeed. Without the model of nuclear fission obtained in the 20s and 30s, the Manhattan Project couldn’t have worked. It is always like this, and therefore we don’t have to be afraid of machines taking over.

Q. [Ultimately, AI is] disruptive technology … there’s always the question of adoption. What are some of the obstacles to introducing working and realistic AI?

A. I see companies struggling to use AI, and I see why they are struggling. The main reason is their data usage pattern: transactional data is stored in a database, then shuffled into what they call a business intelligence data warehouse, and then they work with the data in the warehouse. They are good at this. What they are not good at, unlike companies such as Google that have adopted AI or co-evolved with it, is using the data in a feedback loop to change the transactions. That’s what you need to do when you want to use AI in any production system or business process. You cannot take the data output, feed it into a BI (business intelligence) system, and then work with it offline to get insights. You need algorithms that work on the transactional data continuously and feed their results into the decisions and activities of the live business process, and that requires a lot of changes to the IT infrastructure.
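The contrast between offline BI analysis and a model that sits inside the transaction loop can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference architecture: a hypothetical online logistic-regression scorer decides on each incoming transaction, observes the outcome, and immediately updates its weights with one stochastic-gradient step, so the next decision already reflects the latest data. The class `OnlineScorer`, the function names, the learning rate, and the simulated transaction stream are all hypothetical.

```python
import math
import random


class OnlineScorer:
    """Logistic regression updated one transaction at a time (online SGD)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        # Numerically stable sigmoid.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, features, outcome):
        # Gradient of the log-loss for a single example is (p - y) * x.
        error = self.predict_proba(features) - outcome
        self.bias -= self.learning_rate * error
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]


def run_feedback_loop(scorer, transactions):
    """Decide on each transaction, then learn from its outcome right away.

    Returns the fraction of decisions that matched the observed outcome.
    """
    correct = 0
    for features, outcome in transactions:
        decision = scorer.predict_proba(features) >= 0.5  # act in the live process
        correct += int(decision == bool(outcome))
        scorer.update(features, outcome)                   # feed the result back
    return correct / len(transactions)


def simulated_stream(n, seed=0):
    """Hypothetical stream: the outcome is 1 when feature 0 exceeds feature 1."""
    rng = random.Random(seed)
    stream = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        stream.append((x, 1 if x[0] > x[1] else 0))
    return stream


if __name__ == "__main__":
    scorer = OnlineScorer(n_features=2)
    accuracy = run_feedback_loop(scorer, simulated_stream(2000))
    print(f"decision accuracy over the stream: {accuracy:.3f}")
    print("learned weights:", [round(w, 2) for w in scorer.weights])
```

The design choice that matters is where `update` is called: inside the loop that makes decisions, rather than in a separate offline analysis of a warehouse extract.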

It’s a mindset change focused on the IT infrastructure: the way the systems need to be set up and talk to each other. It’s very challenging if you have an old-fashioned IT landscape of unconnected systems. [So one of the main obstacles involves this paradigm shift,] which requires you to rethink your IT architecture in a very fundamental way. That’s why so many startups are successful: they start [from scratch], without the whole legacy of degenerated IT systems that started as logic systems but have become complex systems because humans and machines have interacted with them so much. It’s like traffic in the center of Milan. For many companies it doesn’t matter so much, but for some it’s make or break. If you’re a manufacturing company and you don’t get it into your assembly line, you’re gone in 10 years. Other companies, like good old life insurers, are very hard to get to introduce AI, but their business is so stable that they might survive much longer. But for businesses that are fast-moving and where productivity increments are crucial, it can become tough if you fail to introduce AI [and upgrade or rebuild your IT infrastructure].

Q. (Comment in Chat) Pattern recognition is the core of cognition and learning. Could you give an example of how AI’s prowess at pattern recognition is different from and would interact with the more human modes of cognition and learning such as lateral thinking or the synthesis of disparate ideas in new and unique ways?

A. I think a very good way of explaining this is astronomy. If you look at another galaxy, you just see a huge, incomprehensible pattern of points of light. If you use pattern recognition, you can find the rotation of the galaxy, which you could never see with the naked eye. But to interpret what this means, you need original thinking. In sciences that deal with a lot of empirical data, or in combinatorial chemistry where you have a lot of data, the human brain is drowned by the data points, but once you apply pattern recognition you see a pattern. The machine doesn’t understand the pattern, but the pattern can be interpreted by human beings, and then new ideas can be generated. I think this is what happened in astronomy, and that’s why astrophysics developed a lot of pattern recognition algorithms. The main improvement with neural networks is that, when you have enough data, the recognition that can be done via the middle layers of the network is quite astonishing. This is really something to look into. Instead, many people look at applications like sales and marketing, or automation as in claims management. What’s underutilized is pattern recognition. It’s very demanding, but the pattern recognition that can be done with neural networks is fully nonlinear. Whereas old-style pattern recognition relies heavily on linear algebra, now you have nonlinear pattern detection. With the new clustering algorithms that come out of neural networks, you can identify more demanding patterns than before, so I think this is a super exciting area.
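The difference between linear and nonlinear pattern detection shows up clearly on the classic XOR pattern. The sketch below is an illustrative assumption that uses plain least squares rather than a neural network: it fits the same four points twice. With only linear features, the best fit predicts 0.5 everywhere and finds no pattern at all; adding a single nonlinear feature, the product x1·x2, recovers the pattern exactly. In a neural network, the middle layers learn such nonlinear features from data instead of having them chosen by hand. The helper names `solve`, `least_squares`, and `predict` are hypothetical.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def least_squares(rows, targets):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve(xtx, xty)


def predict(w, rows):
    return [sum(wi * xi for wi, xi in zip(w, r)) for r in rows]


points = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor = [0.0, 1.0, 1.0, 0.0]

# Linear features only: bias, x1, x2.
linear_rows = [[1.0, x1, x2] for x1, x2 in points]
# The same features plus one nonlinear feature: the product x1 * x2.
nonlinear_rows = [[1.0, x1, x2, x1 * x2] for x1, x2 in points]

w_lin = least_squares(linear_rows, xor)
w_nonlin = least_squares(nonlinear_rows, xor)

if __name__ == "__main__":
    print("linear fit:   ", [round(p, 3) for p in predict(w_lin, linear_rows)])
    print("nonlinear fit:", [round(p, 3) for p in predict(w_nonlin, nonlinear_rows)])
```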

Q. You seem to suggest that there’s an absolute barrier between artificial intelligence and being able to understand human intelligence. To the extent that human intelligence itself is a consequence of physical and chemical interactions, why would it be impossible to model artificial intelligence so as to duplicate human intelligence?

A. Barry and I both believe that the human brain works in a completely causal fashion, exactly as you described it: it’s all electrochemical interactions. But although there is causality, the complexity is so high that we cannot create mathematical models. Our mathematical modeling tools cannot capture the full complexity of such a system. [Consider the case of turbulence]: if I take this bottle of water and pour water into the glass, as I’m doing now, I create turbulence in the glass. Although it’s quite a simple process, the turbulence cannot be modeled mathematically because the complexity is too high. Mathematical physicists have given up on it for good reason: one can’t write down equations that take into account the dissipation and the process of energy transformation. Although it’s causal and we know the basic laws perfectly, we can’t get a mathematical model. It’s the same with smoke from a cigarette: there’s turbulence that we can’t model. The brain is even more complicated. So we just have to accept that there are phenomena beyond our current capabilities. We are confusing our ability to create logic systems with our ability to explain complex systems.

(Barry) [You may be familiar with] the three-body problem or the four-body problem… When we try to predict the causal flows in the brain when people think, we’re dealing with a trillion-body problem. There are so many molecules in the brain, and we don’t know which ones are involved in any given brain activity. We know that there are a lot of them, and we’re not going to be able to track them with mathematical equations that we can’t even come close to formulating, much less solving.

Q. Could you further explain why the proposition you’re putting forward is something that has longevity rather than something that is a function of current capabilities?

A. That has to do with the nature of mathematical thinking. There was a huge revolution in mathematical thinking in the middle of the 17th century… Nevertheless, we see that mathematics as a science, despite its beauty and complexity, is very hard to bring to bear on real nature. Whenever you read a physics textbook, whether the domain is classical mechanics, quantum mechanics, quantum field theory, etc., you see that when physicists start to describe a natural system, they make a huge set of assumptions about the system and simplifications of it, until what is being modeled is basically not what’s going on in real nature but a very highly idealized form of it. Therefore, the whole way that physics describes nature is partial. For example, the Heisenberg equation is only valid for very few particles, and the same is true for the Dirac equation, which deals with electrons. So what I am saying is that if we always explain nature by simplifying and abstracting massively, this seems to be a limitation of our way of thinking. [You could speculate] that the human brain could change in a totally radical way, so that suddenly we could create partial differential equations with tens of thousands of variables and solve them; but even then, the brain is so much more complicated that I think we still wouldn’t be able to model it mathematically. The discrepancy between what we can model and what we can’t is huge, and all the good physicists recognize this. In all the texts, from Newton to Richard Feynman, you always find statements of very strong modesty. What we are best at is creating technology for which we ourselves choose which aspects of the laws of nature we want to exploit. We can then create deterministic logic systems. Sometimes they can get out of control, as at Harrisburg, Chernobyl, or Fukushima.
These were logic systems that got out of control, which has to do with a lack of control over their drivenness, the way energy flows through the system. They then became chaotic, but their normal mode, when they’re under control, is to function as logic systems.

When we engineer systems, we can do a lot with mathematics and physics, but when we look at real nature and its phenomena, we are helpless. For example, if we look seriously at how a cloud creates lightning, it’s a phenomenon we don’t understand, and this is just one of many examples. [This modesty is lacking today, especially among those who don’t fully understand these phenomena.] I don’t know any good physicists who believe that general AI with problem-solving capabilities at the level of a mammal can be built.

It’s often engineers who have a more superficial understanding who tend to believe in the feasibility of AGI.

The really good theoretical physicists I know who [think deeply] about the problem, like Roger Penrose and many others, would say AGI is unfeasible.
